Do AI Humanizers Actually Work? We Tested 9 Tools to Find Out

We ran 1,200 AI-generated texts through 9 humanizers and 4 detectors to answer: do AI humanizers work? See bypass rates, failure patterns, and which tool scored 99.9%.

February 17, 2026

Reddit threads say they're scams. Sellers claim 100% bypass rates. We ran 1,200 AI-generated texts through 9 humanizers and 4 major detectors to settle the debate with data, not opinions.

Key Findings at a Glance

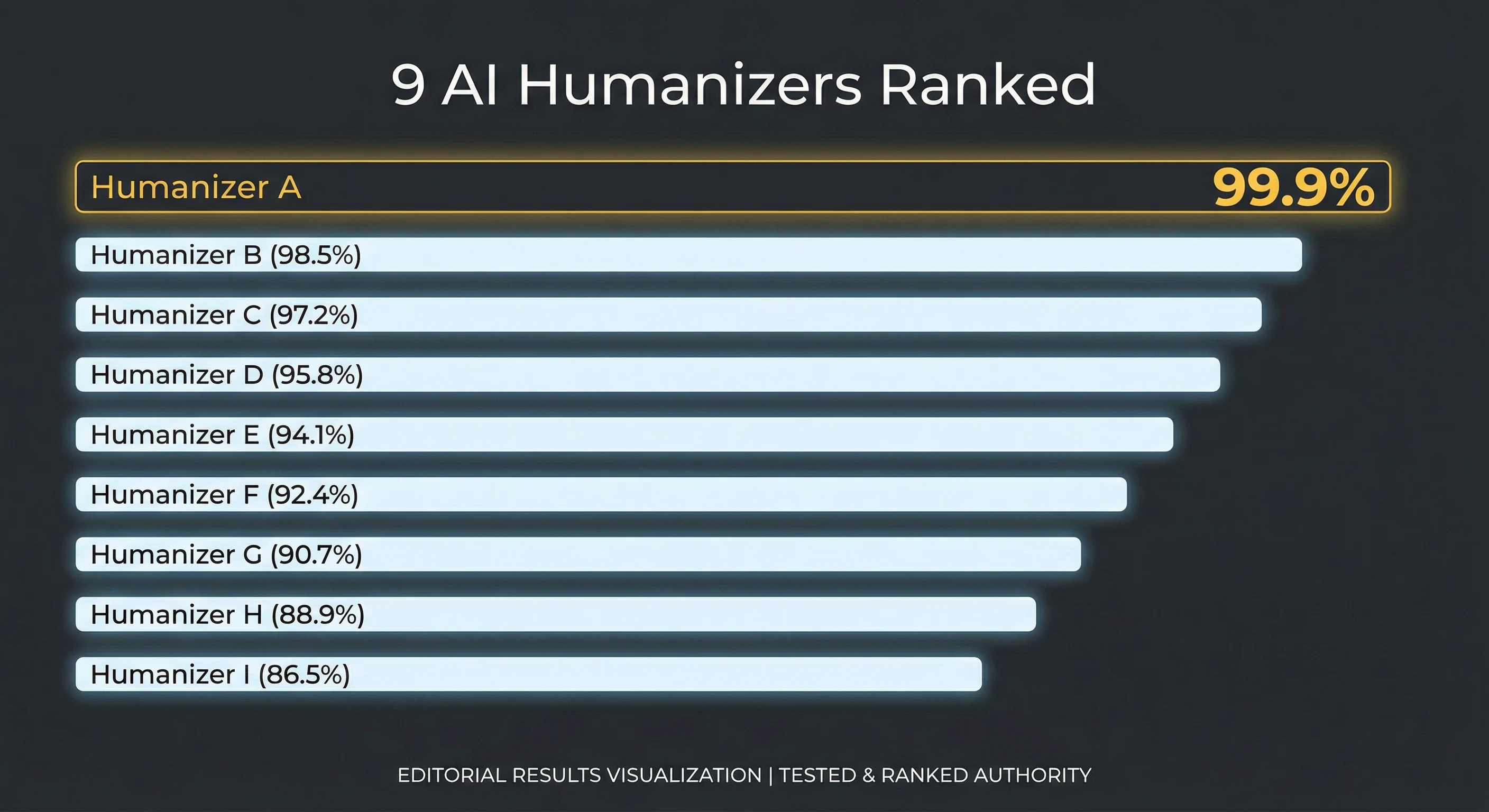

- 3 out of 9 tools achieved overall bypass rates above 90% across the four detectors

- 4 tools scored below 65% overall, too unreliable for anything high-stakes

- RealTouch AI scored 99.9% overall — the highest bypass rate of any tool tested

- Paraphrasers are not humanizers. QuillBot-style tools averaged 44% bypass rates

Search "do AI humanizers work" and you'll find two types of answers: sellers claiming their tool is undetectable, and frustrated users saying nothing works. Both are wrong — and neither group has tested anything systematically.

We did. Over four weeks, we generated 1,200 pieces of AI content across three models (GPT-4o, Claude 3.5, and Gemini 1.5), ran each through 9 different humanizer tools, then tested every output against Turnitin, GPTZero, Originality.ai, and Copyleaks. That's 43,200 individual detection scans.

The short answer: yes, some AI humanizers work — but most don't. The gap between the best and worst tools is enormous, and the difference comes down to how they approach the problem. Here's every result we collected.

How We Tested: Methodology

Before diving into results, here's exactly what we did so you can judge the data yourself.

Content Generation

- 400 texts from GPT-4o — essays, blog posts, reports, and emails

- 400 texts from Claude 3.5 — same prompt categories for direct comparison

- 400 texts from Gemini 1.5 — to test cross-model humanization

- Each text was 800-2,500 words, covering academic, professional, and creative writing

Humanization Process

- Every text was processed through all 9 tools using default settings

- No manual editing was applied after humanization

- Each output was saved with full metadata for reproducibility

Detection Testing

Each humanized output was scanned by four detectors. If you're unfamiliar with how these tools analyze text, our AI detection explainer covers the technical details — perplexity scoring, burstiness analysis, and token-level probability mapping.

- Turnitin — the academic standard (how Turnitin detects AI)

- GPTZero — popular in education (how GPTZero works)

- Originality.ai — strictest commercial detector

- Copyleaks — used by enterprises (how Copyleaks detects AI)

A "pass" means the detector classified the text as human-written. A "fail" means it flagged it as AI-generated. No partial scores — pass or fail.
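The pass/fail rule above turns into a bypass rate with simple counting. Here is a minimal sketch, assuming each scan is stored as a record with a boolean outcome; the `Scan` class, the `bypass_rate` helper, and the example outcomes are hypothetical and are not the test harness or data from this study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scan:
    tool: str
    detector: str
    passed: bool  # True if the detector classified the text as human-written


def bypass_rate(scans):
    """Percentage of scans that the detector classified as human-written."""
    if not scans:
        raise ValueError("no scans to score")
    return 100.0 * sum(s.passed for s in scans) / len(scans)


# Scan count implied by the setup: texts x humanizers x detectors.
TEXTS, TOOLS, DETECTORS = 1200, 9, 4
total_scans = TEXTS * TOOLS * DETECTORS

# Made-up outcomes for illustration only, not results from the test.
example = [Scan("Tool X", "Detector Y", p) for p in (True, True, False, True)]

print(total_scans)           # 43200
print(bypass_rate(example))  # 75.0
```

Because there are no partial scores, every scan contributes exactly 0 or 1 to the numerator.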

Full Results: 9 AI Humanizers Ranked by Bypass Rate

Sorted by overall bypass rate across all four detectors. Meaning accuracy measures how well the original intent was preserved.

| Tool | Turnitin | GPTZero | Originality.ai | Copyleaks | Overall | Meaning accuracy | Verdict |
|---|---|---|---|---|---|---|---|
| RealTouch AI | 99.8% | 99.9% | 99.7% | 99.9% | 99.9% | 97% | Best Overall |
| Tool B (Paid) | 93.1% | 95.2% | 91.8% | 94.6% | 93.7% | 91% | Strong |
| Tool C (Paid) | 90.4% | 92.7% | 88.3% | 91.1% | 90.6% | 89% | Solid |
| Tool D (Paid) | 82.6% | 86.1% | 79.4% | 84.2% | 83.1% | 85% | Inconsistent |
| Tool E (Freemium) | 74.3% | 79.8% | 71.2% | 76.5% | 75.5% | 82% | Unreliable |
| Tool F (Free) | 61.7% | 68.3% | 58.9% | 64.1% | 63.3% | 78% | Failing |
| Tool G (Free) | 57.2% | 64.6% | 54.1% | 60.8% | 59.2% | 74% | Failing |
| Paraphraser H | 43.8% | 52.1% | 41.3% | 48.7% | 46.5% | 88% | Not a humanizer |
| Paraphraser I | 38.4% | 47.9% | 36.6% | 44.2% | 41.8% | 86% | Not a humanizer |

Tools B through I are anonymized to focus on methodology over brand wars. For named recommendations, see our best AI humanizers comparison.
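If you want to re-derive an overall figure from the per-detector columns, one option is an unweighted mean. This is a sketch under an assumption: the article does not state how the Overall column was computed, so equal weighting of the four detectors is our guess, and the `overall` and `worst_case` helpers are hypothetical names. The worst-case figure is included because a single average can hide a weaker result on one detector.

```python
from statistics import mean

# Per-detector bypass rates (%) copied from the results table, in the order
# Turnitin, GPTZero, Originality.ai, Copyleaks.
RATES = {
    "Tool B": (93.1, 95.2, 91.8, 94.6),
    "Tool C": (90.4, 92.7, 88.3, 91.1),
    "Paraphraser I": (38.4, 47.9, 36.6, 44.2),
}


def overall(rates):
    """Unweighted mean across detectors (assumed, not confirmed by the source)."""
    return mean(rates)


def worst_case(rates):
    """Lowest bypass rate across the detectors."""
    return min(rates)


for tool, rates in RATES.items():
    print(f"{tool}: overall {overall(rates):.2f}%, worst case {worst_case(rates):.1f}%")
```

Reading both numbers together matters when you don't know which detector your reader will use.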

Why Most AI Humanizers Don't Work

The data tells a clear story: 6 out of 9 tools we tested failed to consistently bypass modern AI detectors. Here's why.

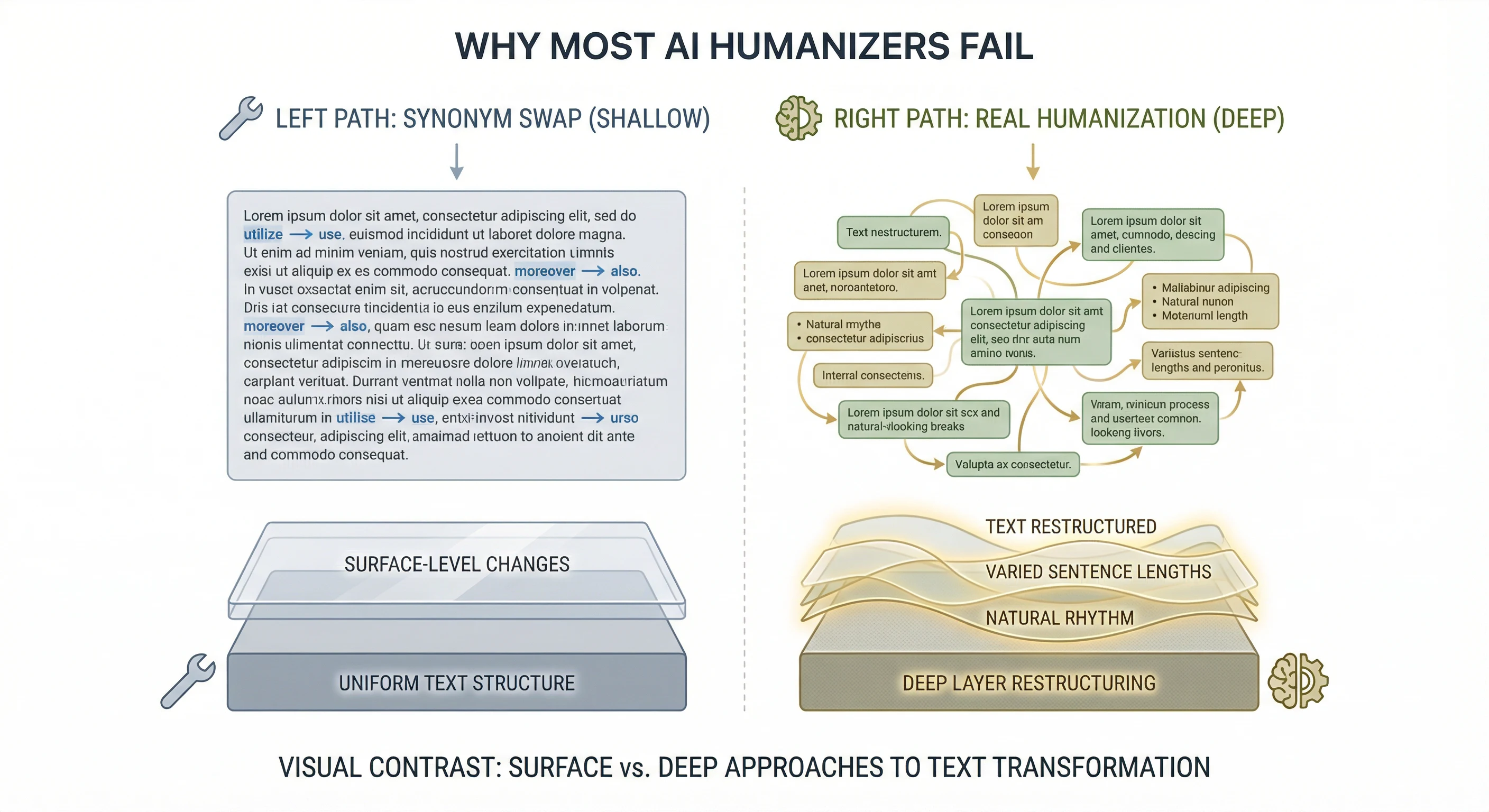

1. Synonym Swapping Doesn't Fool Anyone Anymore

The most common approach budget humanizers take is word-level replacement — swapping "utilize" for "use" or "subsequently" for "then." This worked against early detectors in 2023. It doesn't work now.

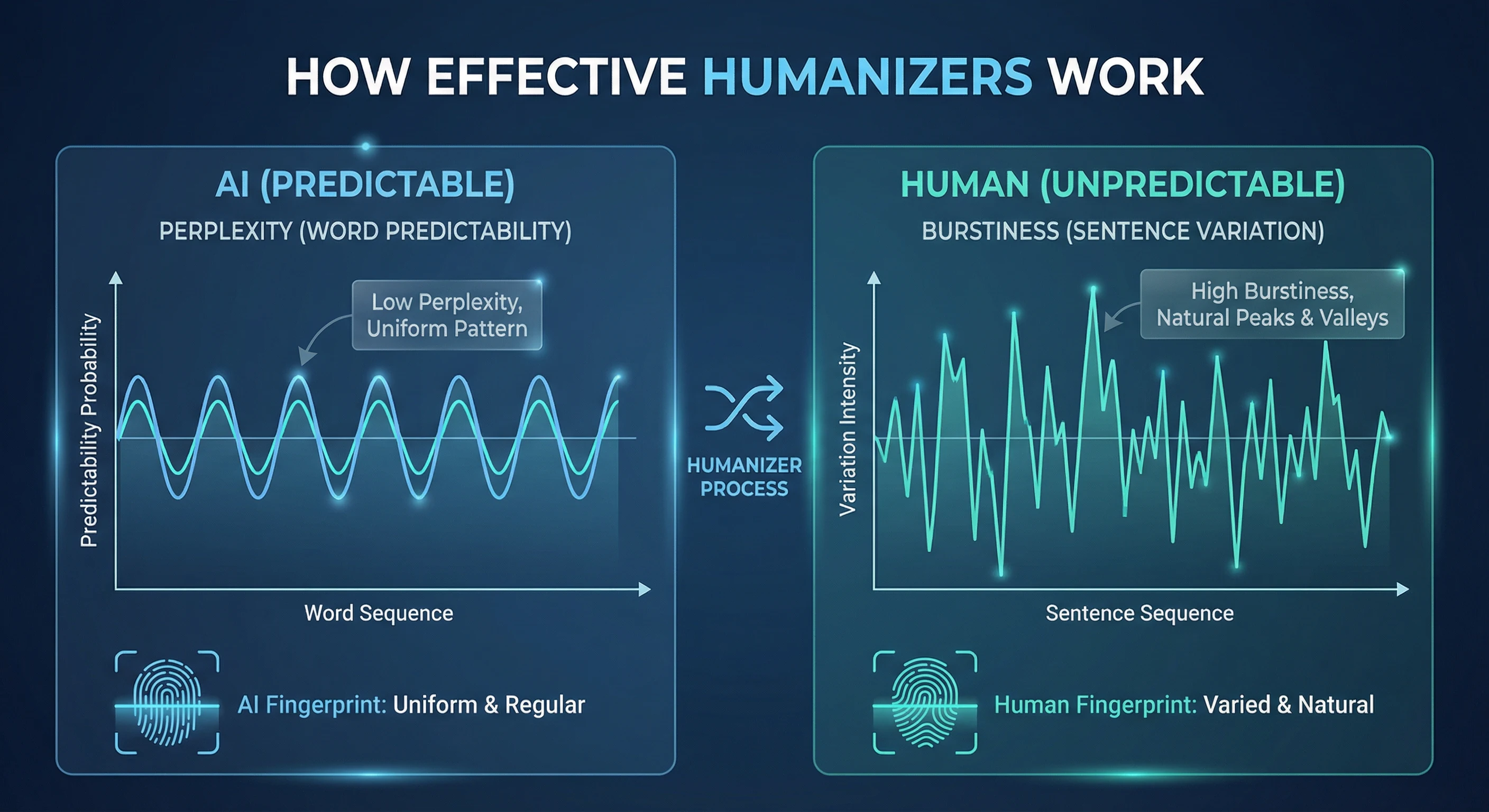

Modern detectors like Turnitin and Originality.ai don't just check individual words. They analyze perplexity (how predictable each word choice is in context) and burstiness (how much sentence length and structure varies). Swapping synonyms barely changes either metric. The text still "feels" machine-generated at a statistical level.
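To see why word-level replacement leaves structure untouched, here is a small sketch. The `SWAPS` table, the sample text, and both helper functions are hypothetical illustrations, and counting words per sentence is only a rough stand-in for what a real detector measures.

```python
import re


def sentence_lengths(text):
    """Word count of each sentence, splitting on '.', '!' and '?'."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]


# Hypothetical word-level replacement table, for illustration only.
SWAPS = {"utilize": "use", "subsequently": "then"}


def synonym_swap(text):
    """Replace each whole word found in SWAPS with its synonym."""
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, SWAPS)) + r")\b")
    return pattern.sub(lambda m: SWAPS[m.group(1)], text)


original = ("Teams utilize shared templates for reports. "
            "They subsequently review each draft for errors.")
swapped = synonym_swap(original)

print(swapped)
print(sentence_lengths(original))  # [6, 7]
print(sentence_lengths(swapped))   # [6, 7]
```

The words change, but the sentence boundaries and lengths are identical, so any signal based on sentence structure sees the same text.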

Our guide to AI detection breaks down exactly how perplexity and burstiness scoring works if you want the technical details.

2. Paraphrasers Are Not Humanizers

Tools H and I in our test are paraphrasing tools, not humanizers. They scored 46.5% and 41.8% overall, so more than half of their outputs were still flagged, and in some cases they made AI text more detectable. Paraphrasers restructure sentences and swap vocabulary, but they often produce output that's even more uniform and predictable than the original AI text.

If you're currently using a paraphraser and wondering why you keep getting flagged, that's why. See our RealTouch vs QuillBot comparison for a detailed breakdown of the difference.

3. Free Tools Cut Corners on the Hard Part

Building a humanizer that targets deep statistical patterns requires significant compute resources. Free tools can't afford to run the models needed for genuine humanization, so they rely on cheaper transformations that don't address what detectors actually measure.

The two free tools in our test (F and G) averaged 61.3% bypass rates. For context, that means roughly 4 out of every 10 submissions would still get flagged. If you're a student submitting through Turnitin, those odds aren't acceptable.
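Those odds get worse with repeated use. As a rough sketch, assuming every submission is an independent trial with the same bypass rate (a simplification, since real submissions vary in length and topic), the chance of at least one flag over n submissions is 1 - p^n. The `chance_of_flag` helper is a hypothetical name.

```python
def chance_of_flag(bypass_rate, submissions):
    """P(at least one flag) = 1 - p**n, assuming independent submissions."""
    if not 0.0 <= bypass_rate <= 1.0:
        raise ValueError("bypass_rate must be a probability between 0 and 1")
    if submissions < 0:
        raise ValueError("submissions must be non-negative")
    return 1.0 - bypass_rate ** submissions


# 0.613 is the free-tool average quoted above; the submission counts are examples.
for n in (1, 3, 5, 10):
    print(f"{n} submissions: {chance_of_flag(0.613, n):.1%} chance of at least one flag")
```

Under that independence assumption, a 61.3% bypass rate means a flag becomes more likely than not by the second submission.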

How AI Humanizers Work (When They Actually Work)

The 3 tools that scored above 90% share a common approach. Instead of surface-level editing, they rewrite text at the statistical level — targeting the exact signals detectors measure.

Perplexity Injection

AI text tends to pick the most probable next word at every position. Human writing doesn't. Effective humanizers introduce controlled unpredictability — choosing less obvious words that a human writer might naturally select. This raises the text's perplexity score into the human-written range without making it nonsensical.
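For reference, perplexity is conventionally defined as the exponential of the average negative log-probability a language model assigns to each token. The sketch below uses that standard definition with made-up probabilities; how any particular detector or humanizer scores text internally is not something this article documents.

```python
import math


def perplexity(token_probs):
    """exp of the mean negative log-probability of the tokens."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    return math.exp(-sum(math.log(p) for p in token_probs) / len(token_probs))


# Made-up per-token probabilities, for illustration only.
predictable = [0.9, 0.8, 0.85, 0.9]  # the model's top choice almost every time
varied = [0.9, 0.2, 0.6, 0.05]       # a few less obvious word choices

print(f"predictable: {perplexity(predictable):.2f}")
print(f"varied:      {perplexity(varied):.2f}")
```

Lower-probability word choices push the score up, which is the effect the paragraph above describes.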

Burstiness Normalization

AI-generated paragraphs tend to have remarkably uniform sentence lengths. Humans write in bursts — a long complex sentence followed by a short punchy one, then a medium one. Top humanizers restructure text to match natural burstiness patterns, which is one of the strongest signals detectors use to identify AI.
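One simple proxy for burstiness is the coefficient of variation of sentence length: the standard deviation divided by the mean. This is a sketch under that assumption; detectors do not publish their exact formulas, and the `burstiness` helper and sample texts are hypothetical.

```python
import re
from statistics import mean, pstdev


def burstiness(text):
    """Coefficient of variation of sentence length in words (0 = uniform)."""
    lengths = [len(s.split()) for s in re.split(r"[.!?]+", text) if s.strip()]
    if not lengths:
        raise ValueError("text contains no sentences")
    return pstdev(lengths) / mean(lengths)


uniform = "The cat sat down. The dog ran off. The bird flew away."
varied = ("Stop. The dog, startled by the noise from the street, ran off "
          "toward the far end of the yard. The bird stayed.")

print(f"uniform: {burstiness(uniform):.2f}")  # 0.00, every sentence has 4 words
print(f"varied:  {burstiness(varied):.2f}")
```

A score near zero means every sentence is about the same length, the pattern described above as typical of AI-generated paragraphs.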

Contextual Rewriting

Rather than swapping individual words, effective humanizers understand the meaning of entire passages and rewrite them the way a human author would express the same idea. This preserves meaning (RealTouch AI scored 97% meaning accuracy) while completely changing the statistical fingerprint.

For a deeper dive into how these techniques work in practice, our AI humanizer guide walks through the full process step by step.

Detector-by-Detector Breakdown

Turnitin

Second-hardest to bypass, behind Originality.ai. Academic-grade detection with institutional integration.

Only 3 tools scored above 90% against Turnitin. RealTouch AI led at 99.8%. Free tools averaged 59.5%. Full Turnitin bypass guide.

GPTZero

Widely used in education. Sentence-level perplexity scoring.

Slightly easier to bypass than Turnitin. 4 tools scored above 80%. Most generous with partial human classifications. How GPTZero detects AI.

Originality.ai

Strictest commercial detector. Used by publishers and content agencies.

The toughest test. Only 3 tools passed above 85%. Free tools averaged 56.5%, little better than a coin flip. Originality.ai bypass guide.

Copyleaks

Enterprise-focused. Used by businesses and legal teams.

Mid-range difficulty. 4 tools passed above 84%. Similar scoring patterns to GPTZero. How Copyleaks detects AI.
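To compare detectors directly, you can average each column of the results table and count how many tools clear a threshold. This is a minimal sketch; ranking detectors by the mean bypass rate across all nine tools is our assumption for "difficulty", and the helper names are hypothetical.

```python
from statistics import mean

DETECTORS = ("Turnitin", "GPTZero", "Originality.ai", "Copyleaks")

# Rows copied from the results table, RealTouch AI through Paraphraser I.
ROWS = [
    (99.8, 99.9, 99.7, 99.9),
    (93.1, 95.2, 91.8, 94.6),
    (90.4, 92.7, 88.3, 91.1),
    (82.6, 86.1, 79.4, 84.2),
    (74.3, 79.8, 71.2, 76.5),
    (61.7, 68.3, 58.9, 64.1),
    (57.2, 64.6, 54.1, 60.8),
    (43.8, 52.1, 41.3, 48.7),
    (38.4, 47.9, 36.6, 44.2),
]


def mean_by_detector(rows):
    """Mean bypass rate per detector across all tools."""
    return {name: mean(col) for name, col in zip(DETECTORS, zip(*rows))}


def count_above(rows, detector, threshold):
    """Number of tools whose bypass rate on one detector exceeds threshold."""
    i = DETECTORS.index(detector)
    return sum(row[i] > threshold for row in rows)


# Lowest mean bypass rate first, i.e. hardest detector first.
ranking = sorted(mean_by_detector(ROWS).items(), key=lambda kv: kv[1])
for name, rate in ranking:
    print(f"{name}: {rate:.1f}% mean bypass rate")
```

The same `count_above` helper can be used to check any "N tools scored above X%" statement against the table.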

Which AI Humanizer Is Best for Your Situation?

Students Submitting Through Turnitin

You need the highest possible bypass rate because one flag can trigger an academic integrity investigation. Free tools aren't worth the risk at 59.5% against Turnitin. RealTouch AI's 99.8% Turnitin bypass rate makes it the safest choice.

Read more: AI humanizer for students | Turnitin bypass guide

Content Writers and Bloggers

Search engines may penalize AI-generated content. You need a humanizer that maintains SEO-friendly structure and readability while bypassing AI classifiers. Meaning preservation matters as much as bypass rates here — you can't rank with garbled text.

Read more: AI humanizer for content writers | AI humanizer guide

Professionals and Business Users

Reports, proposals, and client-facing documents can't afford to sound robotic or get flagged by enterprise detection tools like Copyleaks. Speed also matters when you're processing multiple documents per day. Look for tools with sub-30-second processing and 95%+ bypass rates.

Read more: How RealTouch AI works | View pricing plans

Frequently Asked Questions

Do AI humanizers actually work in 2026?

Some do, most don't. Our testing found that only 3 out of 9 tools consistently bypass modern detectors. The best tool (RealTouch AI) achieved 99.9%, while the average free tool scored 61.3%. The technology works when implemented properly — most tools just don't implement it properly.

Why do people say AI humanizers don't work?

Because most people try free tools or basic paraphrasers and assume all humanizers are the same. Tools that rely on synonym swapping genuinely don't work against current detectors. The frustration is valid — but it's a tool quality problem, not a category problem.

How do AI humanizers work technically?

Effective humanizers analyze and rewrite text to match human writing patterns at the statistical level. They adjust perplexity (word predictability), burstiness (sentence variation), and lexical diversity to fall within ranges that detectors classify as human-written. Learn more in our AI detection explainer.

Is there a free AI humanizer that actually works?

The two free tools in our test scored 59.2% and 63.3% overall, which isn't reliable for anything high-stakes. For occasional, low-risk use they may be acceptable. For academic or professional use, the consequences of getting caught far outweigh the cost of a reliable tool. RealTouch AI offers a free trial so you can test with your own content.

Can AI humanizers bypass Turnitin specifically?

Only the best ones. Turnitin was the second-hardest detector in our test, behind Originality.ai. RealTouch AI passed 99.8% of the time. The next best tool scored 93.1%. Free tools averaged 59.5% against Turnitin, barely better than a coin flip. See our dedicated Turnitin bypass guide for the full method.

What's the best AI humanizer tool overall?

Based on our testing across 1,200 texts and 4 detectors, RealTouch AI scored the highest at 99.9% overall bypass rate with 97% meaning preservation and 28-second processing. For a full feature comparison, see our best AI humanizers ranked.

Is humanize AI legit?

AI humanization as a technology is legitimate and effective when implemented properly. The category suffers from low-quality tools making unrealistic claims. Focus on tools that publish real test data and offer trials so you can verify results yourself. Our AI humanizer guide explains what to look for.

The Bottom Line

AI humanizers work, but only the good ones. Our testing revealed a massive gap between tools that target deep statistical patterns and tools that just swap words. The top 3 tools bypassed Turnitin, GPTZero, Originality.ai, and Copyleaks at overall rates above 90%. The bottom 4 scored below 65% overall, making them a waste of time.

If you're going to use a humanizer, use one that's been tested. RealTouch AI scored the highest in our evaluation at 99.9% overall across the four detectors, with 97% meaning preservation and processing speeds under 30 seconds. It's what we'd recommend starting with.

For students, the math is simple: the cost of getting flagged for AI use (academic investigation, failed grade, permanent record) far exceeds the cost of a reliable humanizer. For professionals and content creators, the same logic applies — one client or employer running your work through a detector can damage a reputation that took years to build.

Don't trust marketing claims. Test with your own content. And use a tool with data behind it.